A Ground-breaking Milestone: A PhD Student of LT Achieves SOTA in AI for Materials Science by Developing the Cutting-Edge LLM DARWIN with Research Partners, Soaring to the Top of Two MatBench Leaderboards

WAN Yuwei, a PhD student in the Department of Linguistics and Translation (LT), City University of Hong Kong, and a recipient of the HK Tech 300 Seed Fund, has led a team of research partners from GreenDynamics and UNSW Sydney (#19 in the QS World University Rankings 2024) to develop a cutting-edge large language model (LLM) called DARWIN. DARWIN achieves state-of-the-art (SOTA) performance on two typical prediction tasks in AI for Materials Science (AI4Materials): Experimental Band Gap Prediction and Classification of Metallicity.

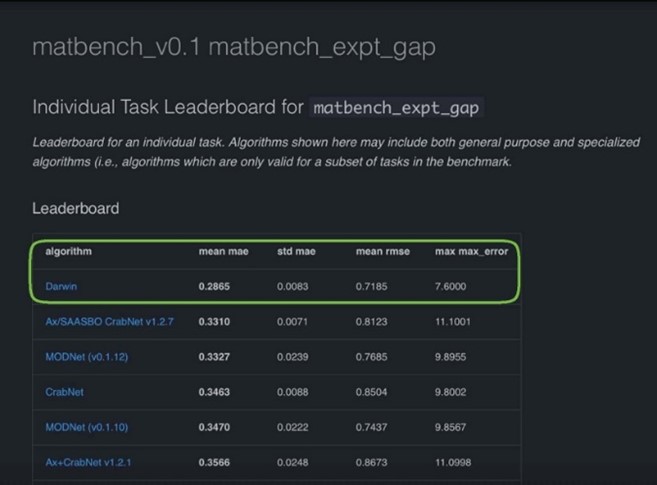

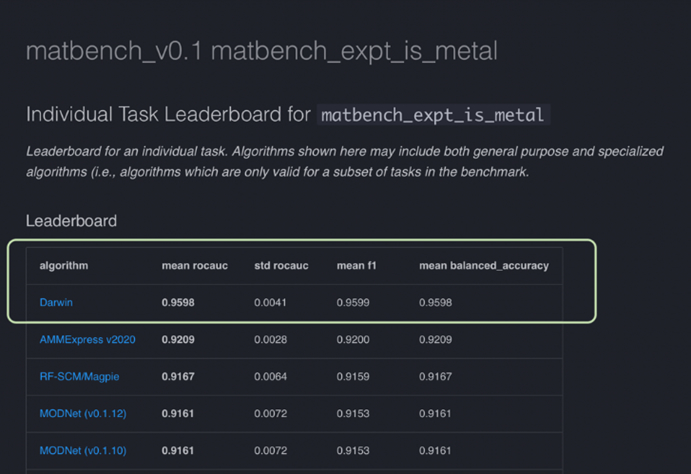

Surpassing all other machine learning (ML) models submitted to this international benchmark, this LLM, fine-tuned for domain-specific applications, has soared to the top of two MatBench leaderboards (https://matbench.materialsproject.org/Leaderboards%20Per-Task/matbench_v0.1_matbench_expt_gap and https://matbench.materialsproject.org/Leaderboards%20Per-Task/matbench_v0.1_matbench_expt_is_metal).

DARWIN has thus earned significant recognition on MatBench, the world's foremost platform for benchmarking SOTA ML algorithms that predict diverse properties of solid materials. Operated by Lawrence Berkeley National Lab and hosted by the Materials Project, MatBench is the most authoritative yardstick for excellence in AI4Materials. In this highly competitive landscape, DARWIN stands out for its exceptional performance and innovation, setting new benchmarks for property prediction.

Yuwei graduated from the BALLA programme of LT in 2019. During her undergraduate studies, she developed a strong interest in Computational Linguistics, largely owing to the influence of the then course leader, Professor KIT Chun Yu. Following his advice to master the most rapidly growing technologies at the frontier of the field, she went to Johns Hopkins University for an MSE in Computer Science before returning to CityU for her PhD studies. As one of the best PhD students in Professor Kit's group, Yuwei pursues not only excellence in learning and research but also success in international cooperation, knowledge transfer, and fund-raising, both for her research and for her entrepreneurial career. As co-founder of Green Dynamics Ltd., she secured start-up capital from the HK Tech 300 Seed Fund in 2022 and further funding from the HKSTP Ideation Program in 2023 as Person in Charge (PIC), and she recently played a key role in attracting HK$3M in venture capital.

Her greatest academic achievement, however, is undoubtedly the development of DARWIN. Its meticulous fine-tuning process involved over 60,000 data points, demanding factual correctness as well as sheer data volume. By introducing the Scientific Instruction Generation (SIG) model into DARWIN's fine-tuning, she automated instruction generation from scientific texts for the very first time, a major leap towards efficient and accurate LLM tuning for domain-specific applications. This breakthrough stems from a genuine commitment to multi-task training, whose versatility and effectiveness allow it to accommodate the profound interconnections among ML models for various scientific tasks. With over 120 stars on its GitHub repository, DARWIN stands out for accomplishing multi-task LLM tuning with a single model, going beyond the norm of training a dedicated model for each task one by one. This streamlined approach not only makes LLM tuning more efficient but also demonstrates the model's power and adaptability across various applications. More information about DARWIN's design and potential is available on arXiv (https://arxiv.org/abs/2308.13565v1) and GitHub (https://github.com/MasterAI-EAM/Darwin).

According to Yuwei, DARWIN isn't just another AI model; it reflects a paradigm shift in natural science towards using AI to drive rapid advances. By using open-source LLMs to amalgamate both structured and unstructured scientific knowledge from public datasets and the available literature, it can not only be applied to specific tasks in materials science but also be tailored for physics, chemistry, and other fields. Since November 2023, Yuwei has published four SCI Q1 articles, three of them as co-first author, in academic journals with high impact factors (5.74, 9.8 and 6.5), in addition to three international conference papers. Her recent successes in research, LLM re-engineering, business, and high-quality publications showcase a model student's exceptional engagement in developing and applying AI technologies to solve practical problems at the frontiers of AI for Science in this new era of big data, typified by the emergence and widespread application of pre-trained and fine-tuned LLMs.